This is a living document that is continually updated. Last updated December 2024.

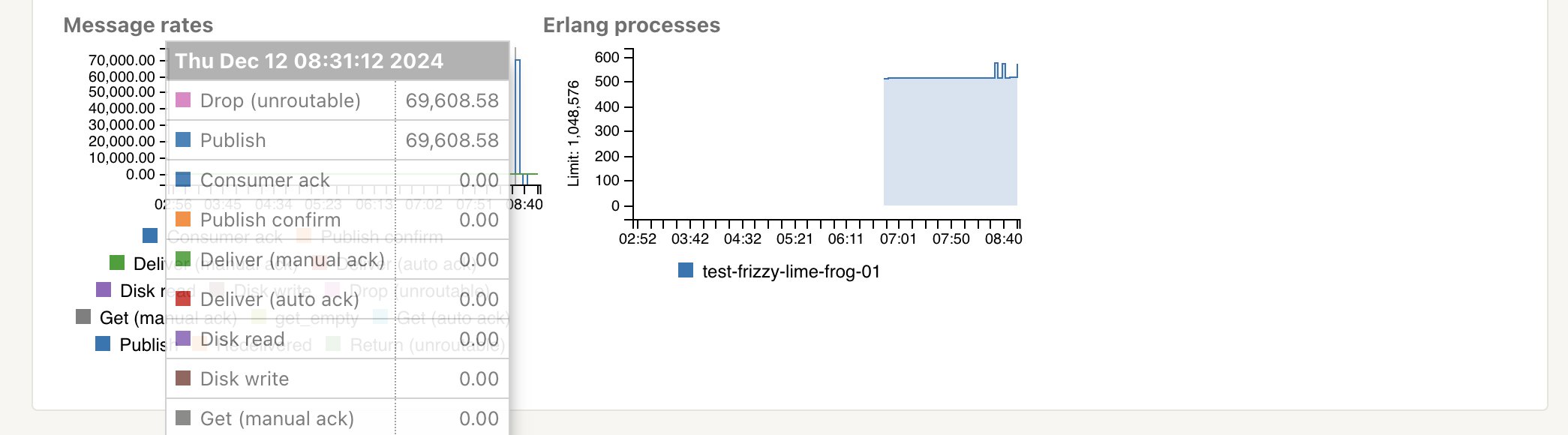

Over the years, we have seen many different reasons for crashed or unresponsive CloudAMQP/RabbitMQ servers. Too many queued messages, a high message rate sustained over a long time, and frequently opened and closed connections have been the most common causes of high CPU usage. To protect against this, we have made tools available that help you address performance issues promptly and automatically, before they impact your business.

First of all, we recommend that users on dedicated plans enable CPU and memory alarms in the control panel. These alarms notify you when CPU or memory usage reaches or exceeds a set threshold for a certain duration. The default threshold for both CPU and memory is 90%, but you can adjust it at any time. Read more about our alarms in the monitoring pages.

Troubleshooting RabbitMQ

General tips and tools to keep in mind once you start troubleshooting your RabbitMQ server.

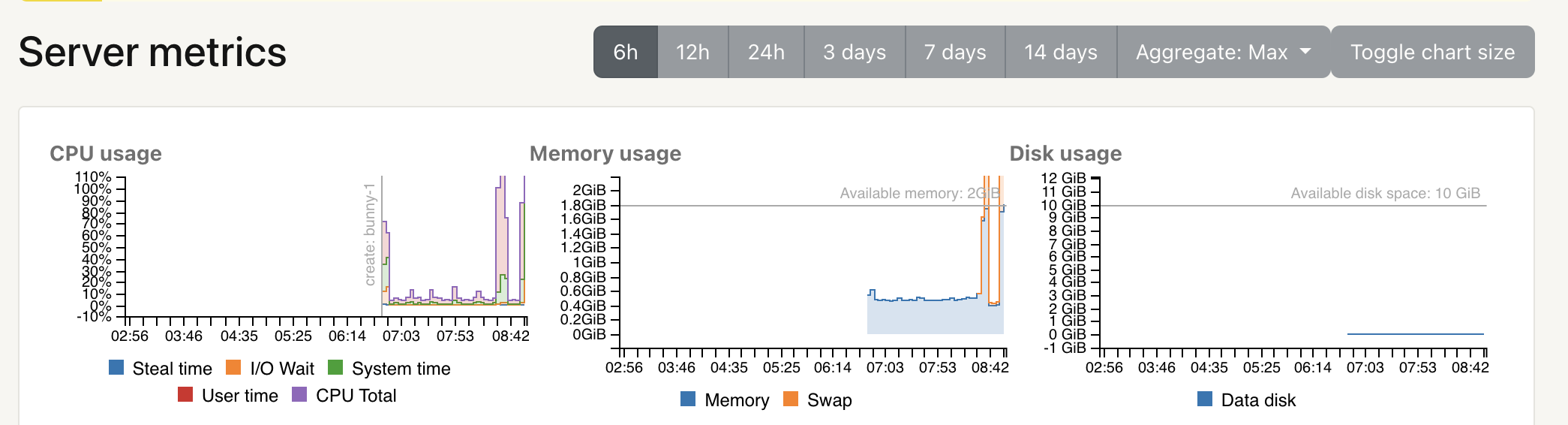

Server Metrics

Server Metrics shows performance metrics from your server, such as CPU usage and memory usage. Do you see any CPU or memory spikes? Does the graph change noticeably at a specific time? Did you make any changes to your code around that time?

RabbitMQ Management interface

If you can open the RabbitMQ management interface, do so and check that everything looks normal, from the number of queues and channels to the number of messages in your queues.
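The same numbers are also available from the management plugin's HTTP API, which is handy if you want to script the check. Below is a minimal sketch using only the Python standard library. It reads GET /api/overview and picks out the totals. The base URL and credentials are placeholders for your own instance's management URL and user, and fetch_overview and summarize are illustrative helper names.

```python
import base64
import json
import urllib.request


def fetch_overview(base_url: str, user: str, password: str) -> dict:
    """GET /api/overview from the RabbitMQ management HTTP API (basic auth)."""
    req = urllib.request.Request(f"{base_url.rstrip('/')}/api/overview")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def summarize(overview: dict) -> dict:
    """Pick out the counters worth eyeballing; missing sections default to 0."""
    totals = overview.get("object_totals", {})
    queued = overview.get("queue_totals", {})
    return {
        "connections": totals.get("connections", 0),
        "channels": totals.get("channels", 0),
        "queues": totals.get("queues", 0),
        "consumers": totals.get("consumers", 0),
        "messages_ready": queued.get("messages_ready", 0),
        "messages_unacknowledged": queued.get("messages_unacknowledged", 0),
    }
```

For example, summarize(fetch_overview("https://your-host", "user", "password")) returns a small dictionary you can log or compare against a previous run. Parsing is kept separate from fetching so the summary logic can be tested without a live server.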

RabbitMQ Logs

You can download logs for all nodes in your cluster via the /logs endpoint. Do you see any new or unknown errors in your logs?
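Once you have the log files, it helps to group the error lines so that new or recurring problems stand out. Below is a minimal sketch that makes two assumptions. First, it expects the "<timestamp> [level] <erlang pid> message" line layout used by recent RabbitMQ versions. Second, it leaves out downloading the files from /logs, so the file contents are assumed to be already available as lines. The helper name error_summary is illustrative.

```python
import re
from collections import Counter

# Assumes lines like: "2024-12-02 10:15:32.123456+00:00 [error] <0.1234.0> message"
LINE_RE = re.compile(
    r"\[(?P<level>debug|info|notice|warning|error|critical|alert|emergency)\]"
    r"\s+<[^>]*>\s+(?P<msg>.*)"
)


def error_summary(lines, levels=("error", "critical")):
    """Count distinct error messages, most frequent first."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.search(line)
        if m and m.group("level") in levels:
            # Replace digits (pids, IPs, ports, timeouts) so repeats of one error group together
            key = re.sub(r"\d+", "N", m.group("msg").strip())
            counts[key] += 1
    return counts.most_common()
```

Multi-line entries such as stack traces only contribute their first line here. That is usually enough to tell a burst of missed-heartbeat disconnects apart from a genuinely new error.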

Most common errors/mistakes

Below is a list of the most common errors and mistakes we have seen on misbehaving servers, along with our thoughts on potential root causes and our recommendations for preventing or fixing each incident:

- Too many queued messages

- Too high message throughput

- Too many queues

- Frequently opening and closing connections

- Connection or channel leak

1. Too many queued messages

Short queues are the fastest: when a queue is empty and has consumers ready to receive messages, each message that arrives at the queue goes straight out to a consumer.

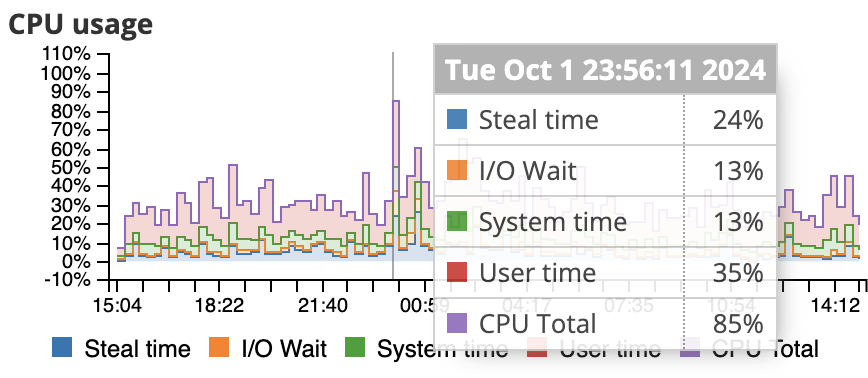

In older RabbitMQ versions, classic queues buffered most messages in memory. This caused problems when a queue received messages faster than its consumers could handle them: the queue would eventually grow so large that RAM usage became high. When that happened, RabbitMQ had to page messages to disk to free up RAM, and the CPU cost per message became much higher than when the queue was empty. CPU I/O wait, the percentage of time the CPU spends waiting on the disk, would also increase.

The good news: RabbitMQ 3.10 introduced Classic Queues v2, whose default behavior is to write most messages directly to disk and keep only a small portion in memory. From RabbitMQ 3.12 onward, this behavior applies to both Classic Queues v1 and v2, minimizing the risk of high memory usage.

If your cluster is experiencing high RAM and/or CPU usage due to too many messages, here are some things to look out for:

- For optimal performance, queue lengths should always stay close to 0.

- Are you running an older version of RabbitMQ? If so, we strongly recommend upgrading to RabbitMQ 3.13 or later and switching to Classic Queues v2, as they offer lower memory and CPU usage, lower latency, and higher throughput. You can upgrade the version from the console (next to where you reboot/restart the instance). If upgrading isn't an option and you are running a version older than 3.10, Classic Queues v2 are not available to you. Instead, consider enabling lazy queues (available from RabbitMQ 3.6). With lazy queues, messages go straight to disk, which minimizes RAM usage. A potential drawback is that disk I/O will increase. Read more about lazy queues in Stopping the stampeding herd problem with lazy queues.

- Are your consumers connected? Start by checking that your consumers are connected. Consumer alarms can be activated from the console. They send a notification when the number of consumers on a queue is less than or equal to a given number.

- Too many unacknowledged messages: All unacknowledged messages have to reside in RAM on the servers, so if you have too many of them, you will run out of RAM. An efficient way to limit unacknowledged messages is to limit how many messages your clients prefetch. Read more about consumer prefetch on RabbitMQ.com: Consumer Prefetch.
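The effect of a prefetch limit on unacknowledged messages can be illustrated with a toy model. The sketch below is not a client API: ToyChannel, deliver and ack are hypothetical names that only mimic how the broker stops delivering once a channel has prefetch_count unacknowledged messages. A prefetch of 0 means "unlimited", as in AMQP basic.qos.

```python
from collections import deque


class ToyChannel:
    """Toy model of one consumer channel; not a real RabbitMQ client API."""

    def __init__(self, prefetch_count):
        self.prefetch_count = prefetch_count  # 0 = unlimited, as in AMQP basic.qos
        self.unacked = set()                  # delivery tags the broker must keep in RAM

    def can_deliver(self):
        return self.prefetch_count == 0 or len(self.unacked) < self.prefetch_count


def deliver(queue, channel):
    """Push messages from the queue until the channel's prefetch window is full."""
    delivered = []
    while queue and channel.can_deliver():
        tag = queue.popleft()
        channel.unacked.add(tag)
        delivered.append(tag)
    return delivered


def ack(channel, tag):
    """Acknowledging a message frees one slot in the prefetch window."""
    channel.unacked.discard(tag)
```

With a backlog of 10,000 messages and prefetch_count=50, only 50 messages are ever outstanding, and each ack lets exactly one more through. With prefetch_count=0, all 10,000 end up unacknowledged at once. In a real client you set the window on the channel, for example with channel.basic_qos(prefetch_count=50) in the Python library pika.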

💡 Generally, we recommend that you enable the queue length alarm from the console or, if possible, set a max-length or a message TTL on your queues.
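The tip above, together with the queue-type advice for older versions, can be applied as a single RabbitMQ policy. The Python sketch below only builds the JSON body for such a policy; it does not apply it. The policy keys max-length, message-ttl, queue-version and queue-mode are standard RabbitMQ policy keys. The helper names and example limits are hypothetical. Keeping everything in one definition matters because only one policy (the one with the highest priority) applies to a given queue.

```python
import json


def queue_policy_definition(version, max_length=None, message_ttl_ms=None):
    """Hypothetical helper: build a policy 'definition' for classic queues.

    version is a (major, minor) tuple, e.g. (3, 13).
    """
    definition = {}
    if max_length is not None:
        # When the limit is hit, RabbitMQ by default drops (or dead-letters)
        # the oldest messages from the head of the queue.
        definition["max-length"] = max_length
    if message_ttl_ms is not None:
        definition["message-ttl"] = message_ttl_ms  # milliseconds
    if version >= (3, 10):
        definition["queue-version"] = 2  # Classic Queues v2
    elif version >= (3, 6):
        definition["queue-mode"] = "lazy"  # fallback for versions without CQv2
    return definition


def policy_body(definition, pattern="^", priority=0):
    """Body for the management API (PUT /api/policies/{vhost}/{name})."""
    return {"pattern": pattern, "definition": definition,
            "priority": priority, "apply-to": "queues"}


print(json.dumps(policy_body(queue_policy_definition((3, 13), 100_000, 3_600_000))))
```

The pattern "^" matches every queue in the vhost. Narrow it if only some queues should be capped.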

If you already have too many messages in a queue, you need to consume messages faster or drain the queue. If you can turn off your publishers, do so until your consumers have worked through the backlog.
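To decide which queues need more consumers or should be drained first, you can scan the queue list from the management API. GET /api/queues returns objects with fields such as vhost, name, messages_ready and consumers. The sketch below assumes that response has already been fetched and parsed, and the thresholds are example values only. Like the console's consumer alarm, it flags a queue when its consumer count is less than or equal to a given number.

```python
def queues_needing_attention(queues, max_ready=10_000, consumer_alarm_at=0):
    """Flag queues with a large backlog or too few consumers, biggest backlog first."""
    flagged = []
    for q in queues:
        ready = q.get("messages_ready", 0)   # stats can be missing on brand-new queues
        consumers = q.get("consumers", 0)
        reasons = []
        if ready > max_ready:
            reasons.append(f"{ready} messages ready")
        if consumers <= consumer_alarm_at:
            reasons.append(f"{consumers} consumers")
        if reasons:
            flagged.append({"vhost": q.get("vhost"), "name": q.get("name"),
                            "messages_ready": ready, "reasons": reasons})
    flagged.sort(key=lambda item: item["messages_ready"], reverse=True)
    return flagged
```

Sorting by backlog puts the queues that hold the most messages, and therefore the most memory and disk, at the top of the list.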

2. Too high message throughput

CPU User time indicates the percentage of time your program spends executing instructions in the CPU, in this case the time the CPU spent running RabbitMQ. If CPU User time is high, it could be due to high message throughput.

Dedicated plans are not artificially limited in any way; the maximum performance is determined by the underlying instance type. Every plan has a given Max msgs/s, which is the approximate burst speed we measured during benchmark testing. Burst speed means that you can temporarily enjoy the highest speed, but after a certain amount of time, or once a quota has been exceeded, you might automatically be knocked down to a slower speed.
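To see why a short benchmark burst can overstate what an instance sustains, here is a toy token-bucket model in Python. It only illustrates the burst concept described above. The rates and credit size are made-up numbers, and the model is not how CloudAMQP or any cloud provider actually meters throughput.

```python
class BurstModel:
    """Toy token-bucket model of burst vs. sustained throughput (illustration only)."""

    def __init__(self, burst_rate, baseline_rate, max_credit):
        self.burst_rate = burst_rate        # msgs/s possible while credit remains
        self.baseline_rate = baseline_rate  # msgs/s that can be sustained indefinitely
        self.max_credit = max_credit        # messages that may run above baseline
        self.credit = max_credit            # start with a full bucket

    def step(self, offered):
        """Simulate one second of offered load; return the msgs/s actually handled."""
        if offered <= self.baseline_rate:
            # Below baseline: everything is handled and unused headroom refills credit
            self.credit = min(self.max_credit, self.credit + (self.baseline_rate - offered))
            return offered
        # Above baseline: every message over the baseline spends one unit of credit
        wanted_extra = min(offered, self.burst_rate) - self.baseline_rate
        extra = min(wanted_extra, self.credit)
        self.credit -= extra
        return self.baseline_rate + extra


model = BurstModel(burst_rate=10_000, baseline_rate=4_000, max_credit=60_000)
print([model.step(10_000) for _ in range(12)])
```

In this model, a constant 10,000 msgs/s runs at full speed for ten seconds and then drops to the 4,000 msgs/s baseline. A short test would report 10,000 msgs/s. Only a longer run reveals the sustained rate.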

Most of our benchmark tests are done with the RabbitMQ PerfTest tool, using one queue, one publisher and one consumer, with durable queues/exchanges and transient messages of the default message size. Many other factors also play in, such as the type of routing, whether you ack or auto-ack, the datacenter, and whether you publish with confirms. For example, publishing large persistent messages will result in a lot of I/O wait time for the CPU. Read more about Load testing and performance measurements in RabbitMQ. You need to run your own tests to verify the performance of a given plan.

If your cluster is experiencing high CPU usage, here are some action points.

- Decrease the average load or upgrade/migrate: When message throughput is too high, you should either try to decrease the average load or upgrade/migrate to a larger plan. To rescale your cluster, go to the CloudAMQP Control Panel and choose edit for the instance you want to reconfigure. There you can add or remove nodes, or change the plan altogether. Read more about migration in Migrate between plans.

- Python Celery: CloudAMQP has long advised against using mingling, gossip, and heartbeats with Python Celery, mainly because of the flood of unnecessary messages they introduce. You can disable them by adding --without-gossip --without-mingle --without-heartbeat to the Celery worker command-line arguments and adding CELERY_SEND_EVENTS = False to your settings.py. Take a look at our Celery documentation for up-to-date information about Celery settings.
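For reference, here is what the worker invocation and setting from the Celery tip look like when put together. The app module name proj is a placeholder for your own Celery app, and the --loglevel flag is included only as a typical extra argument.

```python
import shlex

# "proj" is a placeholder for your own Celery app module.
WORKER_ARGS = [
    "celery", "-A", "proj", "worker",
    "--loglevel=INFO",
    "--without-gossip",     # no worker-to-worker gossip messages
    "--without-mingle",     # skip the startup synchronization with other workers
    "--without-heartbeat",  # no Celery worker heartbeat events
]

# settings.py: old-style uppercase setting name, as used in the tip above
CELERY_SEND_EVENTS = False

print(shlex.join(WORKER_ARGS))
```

If your project uses Celery's newer lowercase setting names (Celery 4 and later), the equivalent of CELERY_SEND_EVENTS is worker_send_task_events.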

3. Too many queues

RabbitMQ can handle a lot of queues, but each queue will, of course, require some resources. CloudAMQP plans are not artificially limited in any way; the maximum performance, and with it the number of queues, is determined by the underlying instance type. Too many queues also use a lot of resources in the stats/management database. If queues suddenly start to pile up, you might have a queue leak. If you can't find the leak, you can add a queue TTL policy, and the cluster will clean up "dead" queues by itself: queues that have gone unused for the configured time are removed automatically. You can read more about TTL at RabbitMQ.com: Time-To-Live and Expiration
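A queue TTL is set with the expires policy key, in milliseconds. Below is a sketch that builds, but does not send, the corresponding management API request (PUT /api/policies/{vhost}/{name}), using only the standard library. Three values are hypothetical: the base URL, the policy name expire-temp and the pattern ^tmp\. for leaked queues. Scope the pattern carefully, because only the highest-priority matching policy applies to a queue, so a broad pattern could replace a policy you already rely on.

```python
import json
import urllib.parse
import urllib.request


def expires_policy_request(base_url, vhost, name, pattern, expires_ms):
    """Build (but do not send) a PUT /api/policies/{vhost}/{name} request."""
    # The default vhost "/" must be percent-encoded as %2F in the URL path.
    path = "/api/policies/{}/{}".format(
        urllib.parse.quote(vhost, safe=""), urllib.parse.quote(name, safe="")
    )
    body = {
        "pattern": pattern,
        # Delete matching queues once they have been unused for expires_ms
        # (no consumers, no basic.get, not redeclared).
        "definition": {"expires": expires_ms},
        "priority": 0,
        "apply-to": "queues",
    }
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


req = expires_policy_request("https://example.invalid", "/", "expire-temp",
                             "^tmp\\.", 30 * 60 * 1000)
```

Sending it requires a basic-auth Authorization header and a call to urllib.request.urlopen(req). Building the request separately makes it easy to review before it touches a production cluster.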

- Python Celery: If you use Python Celery, make sure that you either consume the results or disable the result backend (set CELERY_RESULT_BACKEND = None).

- Connections and channels: Each connection uses at least 100 kB of RAM, and even more if TLS is used.
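At 100 kB per connection, the numbers add up quickly. The back-of-the-envelope estimate below treats 100 kB as a floor (using decimal units, 1 MB = 1,000 kB) and ignores TLS, channels and queues. To account for TLS, pass a larger per-connection figure. The helper name is illustrative.

```python
def min_connection_memory_mb(connections, per_connection_kb=100):
    """Lower bound on RAM (MB) used by open connections alone."""
    return connections * per_connection_kb / 1000


for n in (100, 1_000, 10_000):
    print(f"{n:>6} connections -> at least {min_connection_memory_mb(n):,.0f} MB")
```

In other words, 10,000 open connections tie up roughly 1 GB of RAM before a single message is stored.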

4. Frequently opening and closing connections

If CPU System time is high, you should check how you are handling your connections.

RabbitMQ connections are a lot more resource-heavy than channels. Connections should be long-lived, while channels can be opened and closed more frequently. Best practice is to reuse connections and multiplex a connection between threads with channels. In other words, if you have as many connections as channels, you might need to take a look at your architecture. Ideally, you should have only one connection per process and use one channel per thread in your application.

If you really need many connections, the CloudAMQP team can configure the TCP buffer sizes to accommodate more connections for you. Send us an email once you have created your instance, and we will configure the changes.

If you are using a client library that can't keep connections long-lived, we recommend using our AMQP proxy. The proxy pools connections and channels so that the connection to the server stays long-lived. Read more about the proxy here: AMQProxy.

- Number of channels per connection: It is hard to give a general best number of channels per connection; it all depends on your application. Generally, the number of channels should be larger than the number of connections, but not much larger. On our shared clusters (Little Lemur and Tough Tiger), we have a limit of 200 channels per connection.

5. Connection or channel leak

If the number of channels or connections gets out of control, it is probably due to a channel or connection leak in your client code. Try to find the cause of the leak, and make sure that you close channels when you no longer use them. You can activate an alarm from the control panel of your instance that triggers when the number of connections exceeds a specified threshold.
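Two checks help locate a leak. The first finds connections that hold an unusual number of channels. The management API's GET /api/connections returns one object per connection, including name and channels fields. The second checks whether the total count only ever grows between samples. The sketch below assumes the data has already been fetched. The default of 200 channels mirrors the shared-plan limit mentioned above, and the window size is an arbitrary example; both helpers are illustrative, crude heuristics.

```python
def suspicious_connections(connections, max_channels=200):
    """Connections holding more than max_channels channels, largest first."""
    hits = [
        (c.get("name", "?"), c.get("channels", 0))
        for c in connections
        if c.get("channels", 0) > max_channels
    ]
    return sorted(hits, key=lambda item: item[1], reverse=True)


def looks_like_leak(samples, window=5):
    """True if the last `window` samples of a count strictly increase."""
    recent = samples[-window:]
    return len(recent) == window and all(b > a for a, b in zip(recent, recent[1:]))
```

Feed looks_like_leak a series of total channel or connection counts taken, say, every few minutes. A count that never drops under steady load is a strong hint that something opens channels or connections without closing them.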

We value your feedback and welcome any comments you may have!

This is a list of the most common errors we have seen over the years. Let us know if we missed something obvious that has often happened to your servers. As always, please email us at contact@cloudamqp.com if you have any suggestions or feedback.